Last week, I was invited to do a panel discussion with members of Capital Allocators China on notable trends in generative AI between the US and China. This is the China chapter of Ted Seides’s Capital Allocators community, so the audience is mostly investors. My fellow panelist was the founder of a Chinese startup using multi-modal AI to improve manufacturing; the discussion was moderated by Chee-We Ng of Oak Seed Ventures.

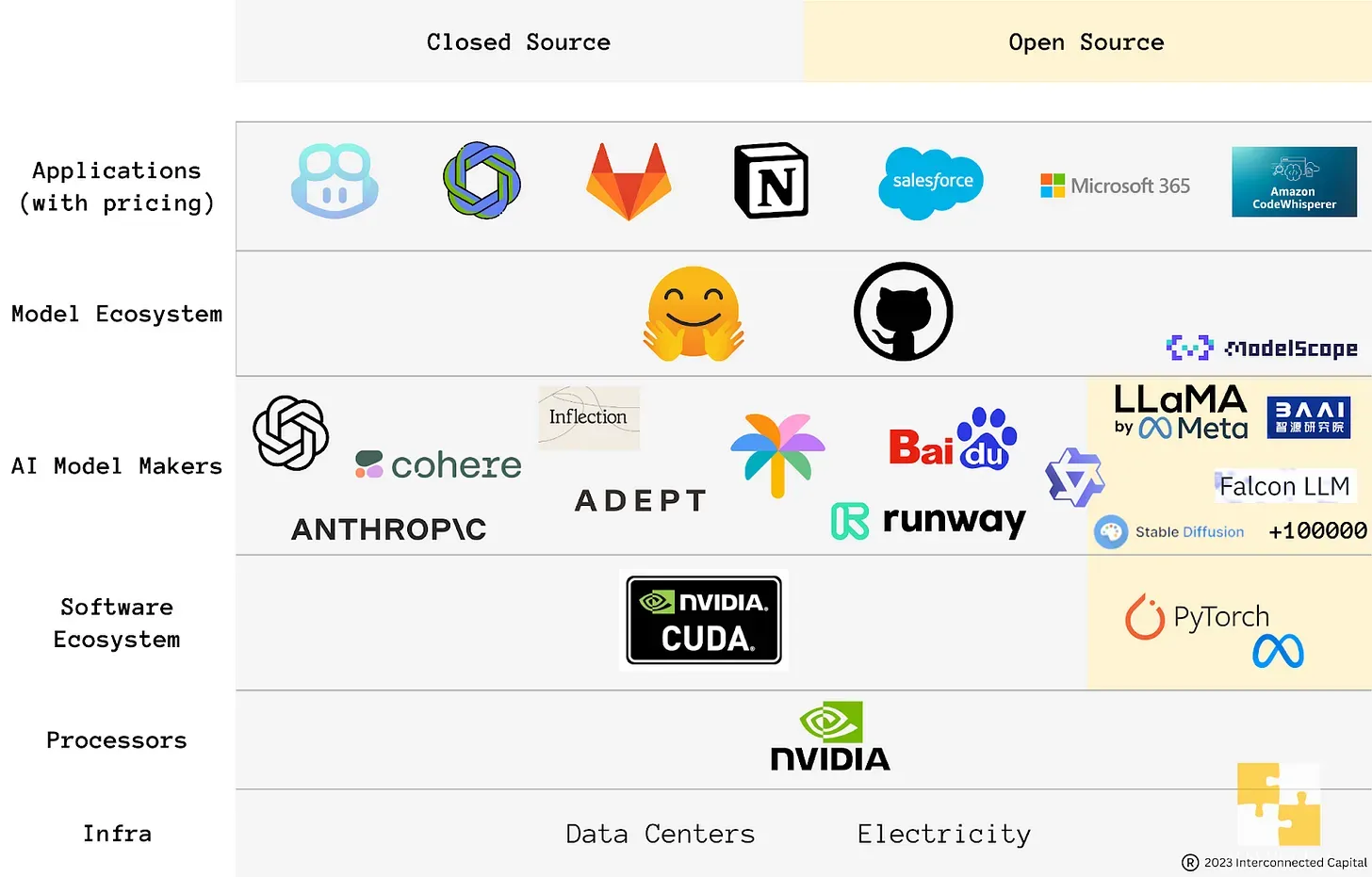

It was a members-only, closed-door event, so I won’t be able to share every single detail. Instead, this post will be a synthesis of some of the major investment themes, observations, and takeaways that emerged from the panel. I’m also including an AI ecosystem stack mapping graphic that our team made before the event to guide the discussion, which I hope can be useful to you.

A few points of explanation to properly contextualize this graphic:

- My purpose was to bring the US perspective, so I primarily mapped the major US players and only a few of the major Chinese players. The graphic is not meant to be comprehensive.

- I only included AI applications that have a pricing scheme with immediate revenue generating potential, e.g. GitHub Copilot, GitLab’s Code Suggestions, Notion’s AI add-on, Microsoft’s Office 365. I did this in order to highlight the “signal” and avoid the “noise” of the thousands of generative AI apps that are mostly experimental toys at this stage.

- Open source technologies are shaded in light yellow, the rest of the mapping are closed source or proprietary technologies.

- I purposely placed the logo of AliCloud’s LLM on the border of closed and open source, because it recently open sourced its 7 billion parameter language model, so it is partially open partially closed, in my view.

- I placed ModelScope near the corner of the “Model Ecosystem” layer as an honorable mention. It is an AI model hosting platform, similar to HuggingFace, that was started by AliCloud. It is still very new, so its influence is limited, but it got multiple mentions in Alibaba’s earnings call last week, so I expect its prominence to rise.

Now onto the big themes, observations, and takeaways.

Electricity

One under the radar aspect of the generative AI boom is the increasing demand for cheap and reliable electricity. A cluster of high-end GPUs dedicated to AI model training needs 4-times as much power as a general purpose CPU cluster. You need more power not only to fuel the computation, but also to cool down the machines.

That’s why Microsoft’s latest data center expansion to bolster capacity to serve ChatGPT and its other generative AI brethren is in Quincy, Washington, just a few miles away from Columbia River. The design decision here is to be able to directly pump water from the river to cool down these GPU-dense data centers. (It is estimated that this three-building data center cluster will need 363,000 gallons of water per day for cooling.)

CoreWeave, the dedicated AI cloud provider and a darling of the generative AI wave, announced last month that it is building its newest data center in Plano, Texas. That is no surprise considering that Texas is the largest energy producer state in the US, and the electricity-hungry GPUs should ideally be as close to the cheapest, most abundant energy source as possible. Another stealth AI cloud startup, possibly called Omniva, is building its infrastructure in the Middle East, another obviously large energy-producing region. The upstart is apparently counting on its close connection with a Kuwaiti royal family to secure cheap electricity.

The need to access affordable and reliable electricity and other forms of energy has always been an important consideration of data center design. However, generative AI’s insatiable demand for high-performing GPUs (at least at this stage of the game, since most of the focus is still on training large foundation models) has tipped the balance of the tradeoffs between proximity to end users and proximity to power.

Most cloud data centers are built to serve up Internet applications and other non-generative-AI use cases, so their locations emphasize physical closeness to end users to deliver low-latency experiences. Thus, the densest locations are places like Virginia (for US east coast), Oregon and California (for US west coast), Hong Kong and Singapore (for APAC), Frankfurt and London (for Europe), South Africa (for Africa), etc. Crypto mining farms, a special purpose kind of data center, take the tradeoffs to one extreme by prioritizing power access and cooling because the end use needs are not that high, thus places like Iceland became a prominent location.

AI-focused data centers’ tradeoffs are somewhere in between generic cloud data centers and cryptic mining. Proximity to end users are somewhat important but an arm’s length access to cheap, reliable power is arguably more important. That makes electricity and power in general – things that most of us take for granted – an important dimension in how (and where!) generative AI will evolve in the future.

Open Source Eroding Closed Source

The open source vs closed source debate was a prominent topic during our panel. Having been working deeply in the open source space as a startup operator, I’ve always thought this debate was a niche topic among engineers, open source communities, academics, and infrastructure-focused VCs. But generative AI really brought it to the forefront of many people’s minds, from larger institutional investors, to policy think tanks, to government regulators.

There are two ways to look at open vs closed in the generative AI context: big tech vs. big tech, startup vs big tech.

The big tech vs big tech dynamic is epitomized by Meta open sourcing Llama to play defense against OpenAI’s GPT, Alphabet’s Palm, and a string of well-funded foundational AI model markers, e.g. Anthropic, Cohere, Adept, Inflection, etc. Meta’s “open source as defense” strategy was explained in detail in a previous post, so I won’t repeat myself here. The key strategic takeaway is that open source can often be used to erode the moat of competing closed source technologies.

An added dimension to open source AI models is its geopolitical implications. Both AliCloud and Baidu AI Cloud have offered Llama 2 as a service on their respective clouds, as have Azure and AWS. This practice of taking open source technologies to the cloud is well-trodden and nothing remarkable, as I explained in another post. But since Llama 2 is pre-trained, using GPUs that are either hard to obtain now or completely off limit to Chinese tech companies due to export control, building off of its foundation could shorten the gap in training progress between Chinese AI model makers and their US counterparts. It is difficult to estimate how much of a difference accessing open source models makes in helping China catch up to the US, and whether that difference is meaningful. But that difference is certainly there, and wouldn't be there without open source.

The startup vs big tech dynamic is one where the startups use open source to play offense against bigger, better-resourced incumbents. Being more open, transparent, and free attracts more developer attention, mindshare, and usage, allowing a small startup to grow bigger quickly and eroding any advantage that big tech companies’ closed source solutions may have. Interestingly, as open source startups become bigger themselves, they tend to adopt a more closed posture by changing licenses and other business practices, like what Hashicorp did last week and many other similar companies did before it. This dynamic has played out many times in other layers of computing infrastructures, like servers, operating systems, and databases.

If you treat foundation AI models as yet another layer in the infrastructure stack (I do), then this pattern will emerge again. At the moment, it is a bit surprising to me that most of the well-funded foundation AI model startups are closed source. I anticipate that will change over time, because they need more developer mindshare to grow, so they will have to adopt open source practices on some level. The one exception is if these model makers decide to tackle a single industry vertical instead, shifting from building a foundation model to, for example, a healthcare model or an accounting model, then open source does not offer as many benefits.

Open source still looks small in my graphic, but it will grow and eat into closed source territory sooner or later.

Some Surprising Chinese AI Model Makers

As far as I can tell, most of the foundation AI model making in the US is concentrated in either big tech companies or startups that raised a huge amount of funding from either VCs or corporate investing arms of the same big tech companies.

The landscape is similar in China, with its own line-up of extremely well-funded AI startups and big tech companies, like Alibaba, Baidu, and Huawei. But there are also a few other surprising places where foundation AI models are being created, where no parallel currently exists in the US.

One place is Internet companies in niche verticals, like Lianjia, a large online real estate brokerage. It is a subsidiary of Ke Holdings, which is traded on the New York Stock Exchange. Lianjia’s team released a well-regarded LLM called BELLE (or Be Everyone's Large Language model Engine) that is derived from Meta’s Llama model. I have yet to see Zillow or Redfin in the US real estate vertical contributing to AI model making.

Another place is academia and government-backed research institutes. The Beijing Academy of Artificial Intelligence (BAAI), which I mentioned in my ecosystem graphic, is a nonprofit research lab with the backing of the Ministry of Science and Technology and the Beijing city government. It released an open source LLM called Aquila and hosts an annual AI academic conference that attracts leading experts like Yann LeCunn and Geoff Hinton. Even Sam Altman showed up (virtually) to this year’s conference, which we wrote about previously in the context of convening power. Two other academic institutions that have released well-regarded models are Tsinghua University and its ChatGLM, and Hong Kong University of Science and Technology and its LMFlow (a tool dedicated to fine tuning models). I haven’t seen similar activities and releases from US academic institutions or other types of research institutes, though I’m admittedly not tracking that universe closely.

Making this observation is certainly not to suggest that there has to be parallels or matching similarities between China and the US, otherwise one side is somehow ahead of or behind the other. But I do find this particular difference striking in that the US landscape is very industry concentrated, while the Chinese landscape is a bit more spread out, thus worth noting.

I hope this synthesis of my Capital Allocators China panel, along with the AI ecosystem stack mapping graphic, is of some value to you. To quote Roy Amara, the Stanford computer scientist, again:

“We overestimate the impact of technology in the short-term and underestimate the effect in the long run.”

Generative AI holds tremendous potential and profound implications, but it still has a long way to go. Patience is key. And patience will pay off big time.